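🎯 Mastering Hyperparameter Tuning with Bayesian Optimization 🚀

Hi, everyone! Today we're looking at a must-have skill in machine learning: hyperparameter tuning with Bayesian optimization. It's one of the most effective tools for squeezing more performance out of a model! 😎

By the time we've worked through this topic, you'll be well on your way to becoming an AI expert. Just like discovering a new talent on 재능넷! So, shall we set off on this journey together? 🚀

💡 Pro Tip: Read to the end and you'll have a solid grasp of hyperparameter tuning, which should help your AI projects grow. Just like your talents blossoming on 재능넷! 🌸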

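🤔 So What Is a Hyperparameter?

First, let's figure out what a hyperparameter actually is. This part really matters! 🧐

Hyperparameters are settings that control how a machine learning model is trained. Unlike model parameters such as weights, they are not learned from the data; you choose them before training starts. Think of them like the heat level or the cooking time when you cook. Good hyperparameter settings are essential for getting the best performance out of a model!

Some examples: 🤓

- Learning rate
- Batch size
- Number of epochs
- Number and size of hidden layers
- Regularization strength

These are all hyperparameters. Tune them well and the model does its job properly! 😉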
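
To make that list concrete, here's a small sketch of where these knobs live in code. It uses scikit-learn's MLPClassifier purely as an illustration (this model is an assumption for the example and isn't used elsewhere in this post), and the values are arbitrary placeholders rather than recommendations.

```python
from sklearn.neural_network import MLPClassifier

# Every argument below is a hyperparameter: we choose it before training,
# and the model never learns it from the data.
# The values are arbitrary placeholders for illustration.
model = MLPClassifier(
    learning_rate_init=0.001,     # learning rate
    batch_size=32,                # batch size
    max_iter=200,                 # upper bound on the number of epochs
    hidden_layer_sizes=(64, 32),  # number and size of hidden layers
    alpha=1e-4,                   # L2 regularization strength
    random_state=42,
)

# get_params() returns the hyperparameters as a plain dict.
hyperparams = model.get_params()
print(hyperparams["learning_rate_init"], hyperparams["hidden_layer_sizes"])
```

Nothing is trained here; the point is only that these values are fixed up front, which is exactly what a tuner has to choose for us.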

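🎭 Analogy time: Hyperparameters are like a chef's secret recipe. Even with the same ingredients (data), the taste (performance) of the dish (model) changes completely depending on that secret recipe! Why not become an AI chef and hunt for the best recipe yourself? 😋

But here's the thing: setting hyperparameters is harder than it looks. Why? 🤔

- Huge number of combinations: There are many hyperparameters, each with a wide range of values, so the number of possible combinations is practically endless.
- Interdependence: The best value for one hyperparameter can depend on the values of the others.
- Data dependence: The best hyperparameters can differ from dataset to dataset.
- Computational cost: Trying every combination would take an enormous amount of time and computing power.

For all these reasons, hyperparameter tuning can be a real headache. And that's exactly why we need... Bayesian optimization! 🎉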
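
Here's a quick back-of-the-envelope sketch of how fast the combinations pile up. The grid sizes and the five-minutes-per-run figure are made-up assumptions, chosen only to show the arithmetic.

```python
from math import prod

# Hypothetical grid: number of candidate values per hyperparameter.
grid = {
    "learning_rate": 10,
    "batch_size": 5,
    "n_epochs": 5,
    "n_hidden_layers": 4,
    "regularization": 10,
}

n_combinations = prod(grid.values())   # 10 * 5 * 5 * 4 * 10
minutes_per_run = 5                    # assumed training time per configuration
total_days = n_combinations * minutes_per_run / 60 / 24

print(n_combinations)        # 10000
print(round(total_days, 1))  # 34.7
```

Even this modest grid would keep a single machine busy for over a month, which is why an exhaustive search quickly stops being an option.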

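Look at this graph. It illustrates why hyperparameter tuning is hard: as time goes on and complexity increases, the difficulty climbs steeply. This is where Bayesian optimization comes to the rescue! 😇

So how does Bayesian optimization tackle this problem? The next section explains it in detail. Excited? 🚀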

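🧙 The Magic of Bayesian Optimization

Now let's step into the world of Bayesian optimization. This is the good part! 🎩✨

Bayesian optimization is a smart way to find good hyperparameters using a probabilistic model. Instead of trying values blindly, it uses the results of earlier trials to predict which hyperparameters are likely to do well, and searches accordingly. Like a treasure hunt! 🗺️💎

🎮 Think of it as a game: Bayesian optimization is a bit like Twenty Questions. The computer picks its questions carefully to home in on the answer, and each question (hyperparameter setting) lets it make a better guess next time!

Let's look at the core components: 🔍

- Objective function: the thing we want to optimize, usually a model performance metric.
- Probabilistic model: estimates the objective function from the results of earlier trials. A Gaussian process is the usual choice.
- Acquisition function: decides which hyperparameters to try next.

These three work together to make the magic happen! ✨

Look at this diagram. It shows how the three core components interact: evaluate the objective function, update the probabilistic model with the result, let the acquisition function pick the next point, and repeat. Step by step, the loop closes in on the best hyperparameters. Pretty cool, right? 😎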
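
The loop can be sketched in a few lines. This is a minimal illustration under stated assumptions, not how any particular library implements it: the objective is a made-up 1-D toy function, the probabilistic model is scikit-learn's GaussianProcessRegressor, and the acquisition function is a simple lower confidence bound evaluated on a fixed grid of candidates.

```python
import numpy as np
from sklearn.gaussian_process import GaussianProcessRegressor
from sklearn.gaussian_process.kernels import Matern

def objective(x):
    # Toy stand-in for "train a model and return its validation loss".
    return (x - 2.0) ** 2 + np.sin(5.0 * x)

rng = np.random.default_rng(0)
candidates = np.linspace(0.0, 4.0, 401).reshape(-1, 1)  # search space: [0, 4]

# Start with a few random evaluations.
X_obs = rng.uniform(0.0, 4.0, size=(3, 1))
y_obs = objective(X_obs).ravel()

for _ in range(15):
    # 1) Probabilistic model: fit a GP to everything observed so far.
    gp = GaussianProcessRegressor(kernel=Matern(nu=2.5), normalize_y=True,
                                  alpha=1e-6, random_state=0)
    gp.fit(X_obs, y_obs)
    mu, sigma = gp.predict(candidates, return_std=True)

    # 2) Acquisition function: lower confidence bound (we are minimizing).
    lcb = mu - 2.0 * sigma
    x_next = candidates[np.argmin(lcb)]

    # 3) Objective function: evaluate the chosen point and record the result.
    X_obs = np.vstack([X_obs, x_next])
    y_obs = np.append(y_obs, objective(x_next[0]))

best_x = X_obs[np.argmin(y_obs), 0]
print(best_x, y_obs.min())
```

Each pass through the loop spends exactly one objective evaluation, which is the expensive part in real tuning; the model fit and the acquisition step are cheap by comparison.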

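Now let's take a closer look at each component. 🤓

1. Objective Function 🎯

The objective function is the quantity we want to optimize, usually a model performance metric. For example:

- Accuracy for classification problems
- Mean squared error for regression problems
- Cross-validation score

The objective function is treated as a "black box": put in an input (hyperparameters) and you get an output (a performance score), but we assume we know nothing about how it works inside.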

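2. Probabilistic Model 🧠

The probabilistic model estimates the objective function from the results of earlier trials. The most widely used choice is the Gaussian process.

To predict the function value at a point, a Gaussian process looks at the values observed at nearby points: the closer an observed point is, the more it influences the prediction. Along with each prediction it also reports how uncertain it is, and that uncertainty grows as you move away from the observed points. This is what gives Bayesian optimization its ability to "learn"!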
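
For the curious, here's a bare-bones sketch of that idea using only NumPy. It assumes an RBF kernel with a fixed length scale and a zero prior mean, and the three observations are made-up numbers; real libraries also fit the kernel parameters, which this sketch skips.

```python
import numpy as np

def rbf_kernel(a, b, length_scale=1.0):
    # Similarity between points: 1.0 when identical, near 0 when far apart.
    sq_dist = (a[:, None] - b[None, :]) ** 2
    return np.exp(-0.5 * sq_dist / length_scale**2)

def gp_posterior(x_obs, y_obs, x_new, noise=1e-8):
    # Standard GP regression equations with a zero prior mean.
    K = rbf_kernel(x_obs, x_obs) + noise * np.eye(len(x_obs))
    K_s = rbf_kernel(x_obs, x_new)
    K_ss = rbf_kernel(x_new, x_new)
    K_inv = np.linalg.inv(K)
    mean = K_s.T @ K_inv @ y_obs
    cov = K_ss - K_s.T @ K_inv @ K_s
    std = np.sqrt(np.clip(np.diag(cov), 0.0, None))
    return mean, std

# Three earlier trials (made-up numbers): hyperparameter value -> loss.
x_obs = np.array([1.0, 2.0, 3.0])
y_obs = np.array([0.8, 0.3, 0.6])

# Predict at an observed point, a point between observations, and a far point.
x_new = np.array([2.0, 2.5, 6.0])
mean, std = gp_posterior(x_obs, y_obs, x_new)
for x, m, s in zip(x_new, mean, std):
    print(f"x={x:.1f}  predicted={m:.3f}  uncertainty={s:.3f}")
```

The uncertainty is essentially zero at the point we already tried, small between two observations, and large far away from all of them. That growing uncertainty is the signal the acquisition function uses to decide where exploring is worthwhile.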

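🌟 재능넷 Tip: Gaussian processes aren't the easiest thing to wrap your head around. But don't worry! On 재능넷 you can get help from machine learning experts and study the topic in more depth. Learning together is more fun anyway, right? 😉

3. Acquisition Function 🕵️

The acquisition function decides which hyperparameters to try next, which makes it the heart of Bayesian optimization! Commonly used acquisition functions include:

- Probability of Improvement (PI)
- Expected Improvement (EI)
- Upper Confidence Bound (UCB)

Of these, Expected Improvement (EI) is one of the most popular, because it balances exploration (trying regions the model is unsure about) and exploitation (refining regions that already look good).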
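
To see how EI trades those two off, here's a small sketch of the usual closed-form EI for a minimization problem. The function name, the `xi` margin, and the candidate numbers are illustrative assumptions, not taken from any specific library.

```python
import numpy as np
from scipy.stats import norm

def expected_improvement(mu, sigma, best_so_far, xi=0.01):
    """EI for minimization, given the surrogate's mean and std at each candidate."""
    mu = np.asarray(mu, dtype=float)
    sigma = np.asarray(sigma, dtype=float)
    improvement = best_so_far - mu - xi
    # With no uncertainty, EI is just the (non-negative) predicted improvement.
    ei = np.maximum(improvement, 0.0)
    ok = sigma > 0
    z = improvement[ok] / sigma[ok]
    ei[ok] = improvement[ok] * norm.cdf(z) + sigma[ok] * norm.pdf(z)
    return ei

# Three hypothetical candidates with made-up surrogate predictions.
mu = [0.30, 0.25, 0.40]      # predicted loss
sigma = [0.00, 0.02, 0.20]   # predictive uncertainty
ei = expected_improvement(mu, sigma, best_so_far=0.30)
print(ei)
```

The first candidate (already known, no better than the current best) scores zero. The second (slightly better, fairly certain) and the third (worse on average, but very uncertain) both get a positive score: the first term of the formula rewards exploitation, the second rewards exploration.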

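Now you know the basic ideas behind Bayesian optimization. But how do you actually put them to work? 🤔 Don't worry! That's what the next section is for. Ready? Let's go! 🚀

🛠️ Bayesian Optimization in Practice

Time to see how Bayesian optimization is applied for real! 😎 You've got the theory, but you're wondering how to use it in practice, right? Let's walk through it step by step.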

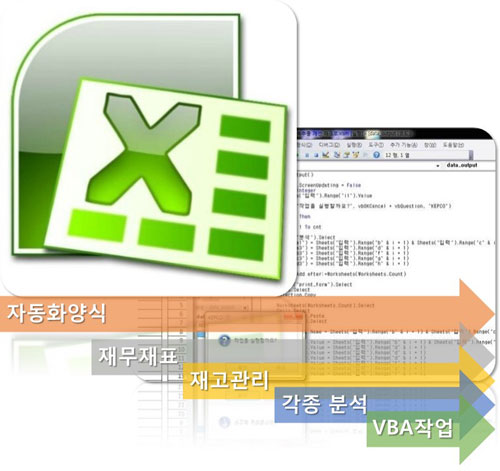

Step 1: Choose a Library 📚

Several libraries support Bayesian optimization. Here are some of the most popular:

- Scikit-Optimize (skopt): easy to use and integrates well with scikit-learn.
- Hyperopt: can handle more complex optimization problems.
- Optuna: a newer library that is easy to use and rich in features.
- GPyOpt: a library specialized in Gaussian processes.

We'll use Scikit-Optimize (skopt). Why? It's easy to use and plays nicely with scikit-learn! 👍

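Step 2: Define the Objective Function 🎯

First we need to define the objective function we want to optimize. As an example, let's optimize the performance of a random forest classifier.

    import numpy as np
    from sklearn.datasets import load_iris
    from sklearn.ensemble import RandomForestClassifier
    from sklearn.model_selection import cross_val_score

    # Load the data
    X, y = load_iris(return_X_y=True)

    def objective(params):
        # skopt passes the hyperparameters as a list, in search-space order
        n_estimators, max_depth, min_samples_split = params
        clf = RandomForestClassifier(n_estimators=n_estimators,
                                     max_depth=max_depth,
                                     min_samples_split=min_samples_split,
                                     random_state=42)
        # Negate the mean accuracy because skopt minimizes the objective
        return -np.mean(cross_val_score(clf, X, y, cv=5, scoring="accuracy"))

Here the objective function returns the negative of the random forest's cross-validated accuracy. The minus sign is there because skopt minimizes by default. We want to maximize accuracy, so we flip the sign and turn it into a minimization problem. Neat, right? 😉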

Step 3: Define the Search Space 🌌

Next, we define the range to search for each hyperparameter. This is called the "search space".

    from skopt.space import Integer

    space = [
        Integer(10, 100, name='n_estimators'),
        Integer(1, 20, name='max_depth'),
        Integer(2, 10, name='min_samples_split'),
    ]

We specified a minimum and a maximum value for each hyperparameter. The optimizer looks for the best values within these ranges!

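Step 4: Run the Optimization 🚀

Everything is ready! Let's run Bayesian optimization.

    from skopt import gp_minimize

    res = gp_minimize(objective, space, n_calls=50, random_state=42)

    # res.fun is the minimized (negated) accuracy, so flip the sign back
    print("Best score: ", -res.fun)
    print("Best parameters: ", res.x)

Here n_calls sets how many times the objective function is evaluated during the optimization. More calls give the optimizer more chances to find a good configuration, but every call costs time.

⚠️ Caution: More calls are not always better! With a small dataset, a very long search can end up fitting the quirks of the cross-validation score itself, which is a form of overfitting. Finding the right balance matters, just like seasoning a dish to taste! 🧂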

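Step 5: Analyze the Results 📊

Once the optimization is done, it's time to look at the results!

    import matplotlib.pyplot as plt
    from skopt.plots import plot_convergence

    plot_convergence(res)
    plt.show()

This plot shows the best objective value found so far after each call, so the curve can only stay flat or go down.

Typically the curve drops quickly during the early calls and then flattens out as improvements become rare. The lowest value on the curve corresponds to the best hyperparameter combination found, which is stored in res.x. Note that it is not necessarily the last point that was evaluated! 👏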

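Step 6: Train the Final Model 🏆

Now let's train the final model with the best hyperparameters we found.

    # res.x holds the best values in search-space order
    best_rf = RandomForestClassifier(n_estimators=res.x[0],
                                     max_depth=res.x[1],
                                     min_samples_split=res.x[2],
                                     random_state=42)
    best_rf.fit(X, y)

Ta-da! 🎉 We now have a random forest model trained with the best hyperparameters found by Bayesian optimization!

💡 Pro Tip: In a real project you might repeat this process several times or compare against other models. Just like comparing and choosing among talents on 재능넷! Try different approaches to find your best model. 🕵️

That's how Bayesian optimization is applied in practice. Not as hard as you expected, right? 😊 Now you can use this powerful tool to upgrade your own machine learning models!

In the next section we'll cover caveats and some advanced tips for using Bayesian optimization. Excited? 🚀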

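🧠 Advanced Tips and Caveats for Bayesian Optimization

You've got the basics of Bayesian optimization down! 👏 But hold on, we're not done yet. There are some advanced tips for using it more effectively, plus a few things to watch out for. Let's go through them. 🤓

1. Set the Initial Points 🎬

The result of Bayesian optimization can depend on where it starts, so choosing good initial points matters.

    from skopt import gp_minimize

    # Initial points to evaluate first
    initial_points = [
        [50, 10, 5],  # n_estimators, max_depth, min_samples_split
        [80, 15, 3],
        [30, 5, 8],
    ]

    res = gp_minimize(objective, space, n_calls=50, x0=initial_points, random_state=42)

This way the search starts from settings we already believe are good. These points are evaluated first and count toward n_calls. It's like starting a treasure hunt with a good map! 🗺️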

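2. Use Parallel Processing ⚡

Bayesian optimization can take a long time, and most of that time usually goes into evaluating the objective function. The search itself is sequential, because each new point depends on the earlier results, but you can speed up each evaluation by running the cross-validation folds in parallel!

    import numpy as np
    from skopt import gp_minimize

    # Reuses X, y, space, and the scikit-learn imports from the earlier steps
    def parallel_objective(params):
        n_estimators, max_depth, min_samples_split = params
        clf = RandomForestClassifier(n_estimators=n_estimators, max_depth=max_depth,
                                     min_samples_split=min_samples_split, random_state=42)
        # n_jobs=-1 spreads the 5 cross-validation folds over all CPU cores
        return -np.mean(cross_val_score(clf, X, y, cv=5, scoring="accuracy", n_jobs=-1))

    res = gp_minimize(parallel_objective, space, n_calls=50, random_state=42)

n_jobs=-1 means "use every available CPU core". Note that gp_minimize has its own n_jobs argument, but that one only parallelizes the internal optimization of the acquisition function, not the calls to the objective function. With the folds running side by side, it's like several people hunting for treasure at the same time! 🏃💨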

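3. Set Up Early Stopping ⏱️

Sometimes it's better to stop early once the optimization is no longer improving. This is called "early stopping".

    from skopt.callbacks import DeltaYStopper

    # Stop once the 5 best objective values are closer together than delta
    stopper = DeltaYStopper(delta=0.001, n_best=5)

    res = gp_minimize(objective, space, n_calls=100, callback=[stopper], random_state=42)

This setting means "stop when the 5 best results found so far are almost identical!" When the search keeps landing on nearly the same score, more calls are unlikely to help. It's like deciding you've reached the summit and not climbing any further! ⛰️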
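
If you'd rather stop after a fixed number of calls without any improvement, you can write your own callback. This sketch assumes that skopt calls each callback with the current result object after every evaluation and stops when the callback returns True, and that the result exposes the objective values as `func_vals`. The class name `NoImprovementStopper` is hypothetical, not part of skopt.

```python
from types import SimpleNamespace

class NoImprovementStopper:
    """Stop when the best value has not improved during the last `patience` calls."""

    def __init__(self, patience=10, min_delta=0.0):
        self.patience = patience
        self.min_delta = min_delta

    def __call__(self, res):
        vals = list(res.func_vals)
        if len(vals) <= self.patience:
            return False  # not enough history yet
        best_before = min(vals[:-self.patience])
        best_recent = min(vals[-self.patience:])
        # True tells the optimizer to stop.
        return best_recent >= best_before - self.min_delta

# Quick check with fake result objects (no optimizer needed for the demo).
improving = SimpleNamespace(func_vals=[-0.90, -0.92, -0.93, -0.95, -0.96])
stalled = SimpleNamespace(func_vals=[-0.96, -0.90, -0.92, -0.95, -0.93])
stopper = NoImprovementStopper(patience=3)
print(stopper(improving))  # False
print(stopper(stalled))    # True
```

To use it, you would pass an instance in the callback list, for example callback=[NoImprovementStopper(patience=10)].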

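4. Check Which Hyperparameters Matter 🔍

Knowing which hyperparameters matter most lets you optimize more effectively.

    import matplotlib.pyplot as plt
    from skopt.plots import plot_objective

    plot_objective(res)
    plt.show()

This draws partial dependence plots: for each hyperparameter, how the model's estimate of the objective changes as that hyperparameter varies, averaged over the others. A curve that swings a lot means the hyperparameter has a big effect; a nearly flat curve means it matters little. That tells you which hyperparameters deserve more of your attention. 👀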

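5. Balance Exploration and Exploitation ⚖️

In Bayesian optimization, the balance between exploration and exploitation is important. One way to adjust it is to choose the acquisition function.

    from skopt import gp_minimize
    from skopt.utils import use_named_args

    @use_named_args(space)
    def objective(**params):
        # params is a dict such as {'n_estimators': 50, 'max_depth': 10, ...}
        clf = RandomForestClassifier(random_state=42, **params)
        return -cross_val_score(clf, X, y, cv=5, scoring="accuracy").mean()

    res = gp_minimize(objective, space, n_calls=50, acq_func='EI', random_state=42)

The acq_func parameter accepts 'EI' (Expected Improvement), 'PI' (Probability of Improvement), 'LCB' (Lower Confidence Bound), and a few others. Because skopt minimizes, it uses a lower confidence bound where maximization-style descriptions talk about UCB. If you leave acq_func unset, gp_minimize uses 'gp_hedge', which chooses among EI, PI, and LCB at each step. Each one behaves differently, so pick what suits your problem! 🎭

💡 Pro Tip: 'EI' works well in most cases. 'PI' tends to be greedier, favoring points that are likely to beat the current best even by a tiny margin. To explore more, raise xi (for 'EI' and 'PI') or kappa (for 'LCB'); to converge faster, lower them. Like adjusting the heat while cooking, tune it to the situation! 🔥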
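
Here's a tiny numeric sketch of what the exploration weight does, using a lower confidence bound written out by hand. The predictions are made-up numbers and `pick_with_lcb` is a hypothetical helper, so treat this as an illustration of the idea rather than of any library's internals.

```python
import numpy as np

# Made-up surrogate predictions for four candidate settings.
mu = np.array([0.20, 0.23, 0.30, 0.40])      # predicted loss
sigma = np.array([0.01, 0.05, 0.10, 0.15])   # predictive uncertainty

def pick_with_lcb(kappa):
    # Lower confidence bound for minimization: an optimistic guess of the loss.
    lcb = mu - kappa * sigma
    return int(np.argmin(lcb))

for kappa in (0.0, 1.0, 3.0):
    print(kappa, pick_with_lcb(kappa))
```

With kappa at 0 the pick is purely the best predicted candidate (index 0); at 1 it shifts to a slightly worse but less certain one (index 1); at 3 it jumps to the most uncertain candidate (index 3). A larger weight means more exploration.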

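Caveats ⚠️

- Watch out for overfitting: The tuned hyperparameters can overfit the validation data used during the search. Keep a separate test set that the optimizer never sees, and evaluate the final model on it.
- Computational cost: If each objective evaluation is slow, the whole optimization is slow. Where possible, use a cheaper proxy objective, such as training on a subset of the data.
- Curse of dimensionality: Optimizing too many hyperparameters at once makes the search less effective. Pick only the important ones.
- Local optima: Bayesian optimization can also get stuck in a local optimum. Running it several times with different random seeds is a good safeguard.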
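
For the overfitting caveat, here's one way the hold-out idea could look. It's a sketch under assumptions: `best_params` is a hypothetical placeholder for whatever the optimizer returns, and the 80/20 split is an arbitrary choice.

```python
from sklearn.datasets import load_iris
from sklearn.ensemble import RandomForestClassifier
from sklearn.model_selection import cross_val_score, train_test_split

X, y = load_iris(return_X_y=True)

# Set the test set aside BEFORE any tuning happens.
X_train, X_test, y_train, y_test = train_test_split(
    X, y, test_size=0.2, stratify=y, random_state=42
)

def objective(params):
    # Same shape as the tutorial's objective, but it only sees the training split.
    n_estimators, max_depth, min_samples_split = params
    clf = RandomForestClassifier(n_estimators=n_estimators, max_depth=max_depth,
                                 min_samples_split=min_samples_split, random_state=42)
    return -cross_val_score(clf, X_train, y_train, cv=5, scoring="accuracy").mean()

# Placeholder for the optimizer's best point (hypothetical values).
best_params = [50, 10, 5]
cv_accuracy = -objective(best_params)

final_model = RandomForestClassifier(n_estimators=best_params[0], max_depth=best_params[1],
                                     min_samples_split=best_params[2], random_state=42)
final_model.fit(X_train, y_train)
test_accuracy = final_model.score(X_test, y_test)
print(f"CV accuracy: {cv_accuracy:.3f}  held-out test accuracy: {test_accuracy:.3f}")
```

If the held-out accuracy comes out clearly below the cross-validated score from the search, that's a hint the tuning has latched onto the validation folds.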

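And that's it: you now know the advanced side of Bayesian optimization too! 🎓 Put this powerful tool to work and your machine learning models will get a real upgrade. Just like discovering and growing a new talent on 재능넷! 😉

All that's left is to try it for real. Apply Bayesian optimization to a project of your own, and please share the results. Growing together is the best part! 🌱

Welcome to the world of Bayesian optimization. You're an AI wizard now! 🧙✨ We're cheering you on for the road ahead. You've got this! 💪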


© 2025 재능넷 | All rights reserved.
